Like many things on the internet, deepfakes started with porn before making their way into the mainstream. As people trained A.I. to replace (fully clothed) people’s faces with mimics of others, the results went viral. Nic Cage as Amy Adams, Steve Buscemi as Jennifer Lawrence, and Jordan Peele posing as Barack Obama all rang the alarm on deepfakes. As Peele-as-Obama put it: “We’re entering an era in which our enemies can make it look like anyone is saying anything at any point in time, even if they would never say those things.”

Now, it appears we’ve reached a full-on deepfake panic. “Top A.I. researchers race to detect ‘deepfake’ videos: ‘We are outgunned,’ ” reads one Washington Post headline. Other stories worry that deepfakes present a threat to democracy and could damage the 2020 election. Tech companies have a big problem on their hands as they try to figure out how to manage the spread of these videos online. So far, Facebook has refused to remove a slowed-down video of House Speaker Nancy Pelosi that made her appear drunk, as well as a doctored video appearing to show CEO Mark Zuckerberg gloating about his “total control of billions of people’s stolen data.”

That video was created by two artists, Bill Posters and Daniel Howe, who worked with ad agency Canny. It appeared as part of an exhibition called Spectre at the U.K.’s Sheffield Doc Fest, and was also meant as a test of Facebook’s censorship: If the company wouldn’t take down the manipulated video of Pelosi, would it act when its founder and platform were being smeared?

So far, the answer seems to be no. But if we’re waiting for tech giants to develop consistent standards to manage deepfakes, we might as well be waiting for Godot. Recent deliberations like YouTube’s flip-flopping on allowing homophobic content and Twitter’s inquiry into banning white supremacists indicate how difficult it is for these huge companies to grapple with questions of free speech from users (and, in many cases, the ad revenue and data they generate) versus the rights of other users to exist online, free from harassment. And as Tiffany C. Li wrote in Slate earlier this month, copyright law probably won’t do much to eradicate deepfakes, either, given that many popular deepfakes could qualify as “fair use,” exempting them from copyright infringement.

Until researchers figure out how to reliably reverse-engineer a deepfake, videos like the ones in Spectre are perhaps the best defense we have against the dangerous misinformation fake videos can spread. The exhibition, which included fake videos of Kim Kardashian and Donald Trump, provided “an opportunity to educate the public on the uses of A.I. today, but also to imagine what’s next,” Canny co-founder Omer Ben-Ami told Motherboard. Viewers immediately recognize the subjects in the video, but what they say is clearly fishy: Fake Kardashian talks about how she doesn’t care about her haters because their data has made her “rich beyond my wildest dreams,” and Trump’s voice, which is slightly off but still convincing enough, says his popularity comes from “algorithms and data.”

Commenters on Twitter, YouTube, and Instagram have mocked the videos for being unconvincing. But identifying what doesn’t work about these videos is a form of media literacy. These sorts of videos offer us subtle practice in recognizing what makes something real and fake. “The message was too focused and succinct,” one commenter wrote on the Trump video. (I also can’t recall ever hearing Trump use the word algorithm.) On the Kardashian video, several commenters say they’re impressed with how convincing the video is, but one commenter points out that fake Kardashian’s voice, facial movements, and the video’s sound are “off.” If you look very closely, rendered Kardashian’s lips distort ever so slightly when she moves her head while talking. It’s extremely subtle, but still just enough of a glitch that you might suspect something is not quite right about this video.

If the concern is spread of misinformation, perhaps we can look to deepfakes’ static predecessor—Adobe Photoshop—for clues about how to combat it. “Which photograph….is the real thing? No one knows for sure, in the age of Photoshop,” reads the subheadline on a 2004 Salon article by Farhad Manjoo. One photojournalist interviewed for the piece “worries that the truth we see in photographs will diminish in a digital age,” writes Manjoo. “He has two nightmares: First, that fake pictures will be mistaken for true pictures, rattling the political discourse. But a scarier proposition for him is that, in the long run, people will start to ignore real pictures as phonies.”

That photojournalist wasn’t wrong. There have been countless Photoshop scandals in the 15 years since, and people do often question whether pictures are real. We also have yet to figure out a way to definitively identify whether a photo has been doctored. Just last week, some researchers announced a new tool that identifies modified photos, but only if those changes were made using Adobe software, which goes to show how far we are from a universal solution.

In the absence of a technology-driven way of identifying fakes, we’ve grown savvier as a society. We have collectively accepted that photos can be and now often are edited, and approach images with a dose of skepticism. There are now entire subreddits and Instagram accounts where people post, analyze, and make fun of bad photoshops. Tools like Instagram, Photoshop, and Facetune allow anyone to play around with photos, from changing lighting and filters to nipping and tucking body parts. In this direct experience with spotting edited photos (especially when they’re side by side with the original) and manipulating them directly, we’ve learned how easy it is to make these tweaks, and how convincing the end result can be. “This looks ‘shopped! I can tell from some of the pixels, and from seeing quite a few ‘shops in my time,” goes the old meme—but there’s some truth to it. As we actually have seen quite a few ‘shops in our time, we’ve begun to see the telltale signs of digital doctoring: warped or distorted backgrounds, mismatched shadows, and peculiar lighting. And, perhaps more importantly, we’ve come to understand that anything could be ’shopped.

This is a type of media literacy similar to Dakota State English professor William Sewell’s “Lie-Search presentation” unit, a method he details in a 2010 paper published in the Journal of Media Literacy Education. He asks his students to “create or re-tell a very elaborate and very believable hoax”—a few highlights include a “special news report describing how doctors can cure cancer with specially fermented cheese,” which “had the look and feel of an episode of ‘Dateline’ ” and how “a banjo-playing Hitler invaded the Soviet Union because of a failed romance with Stalin.” Students are encouraged to incorporate videos, photos, and a compelling soundtrack “in order to generate authenticity of the lie,” to make up statistics or whatever else they need to sound convincing, and present a list of works cited at the end of their presentation for added credibility. After students present, the class gets into dissecting the multimedia and faux-factual elements that made these fake stories convincing, and what to be skeptical of in the future. Among those things is a demonstration of reverse Photoshopping, but there’s also simple direct experience with seeing how easy it is to make stuff up convincingly, and the right questions to ask when something seems too wild to be true.

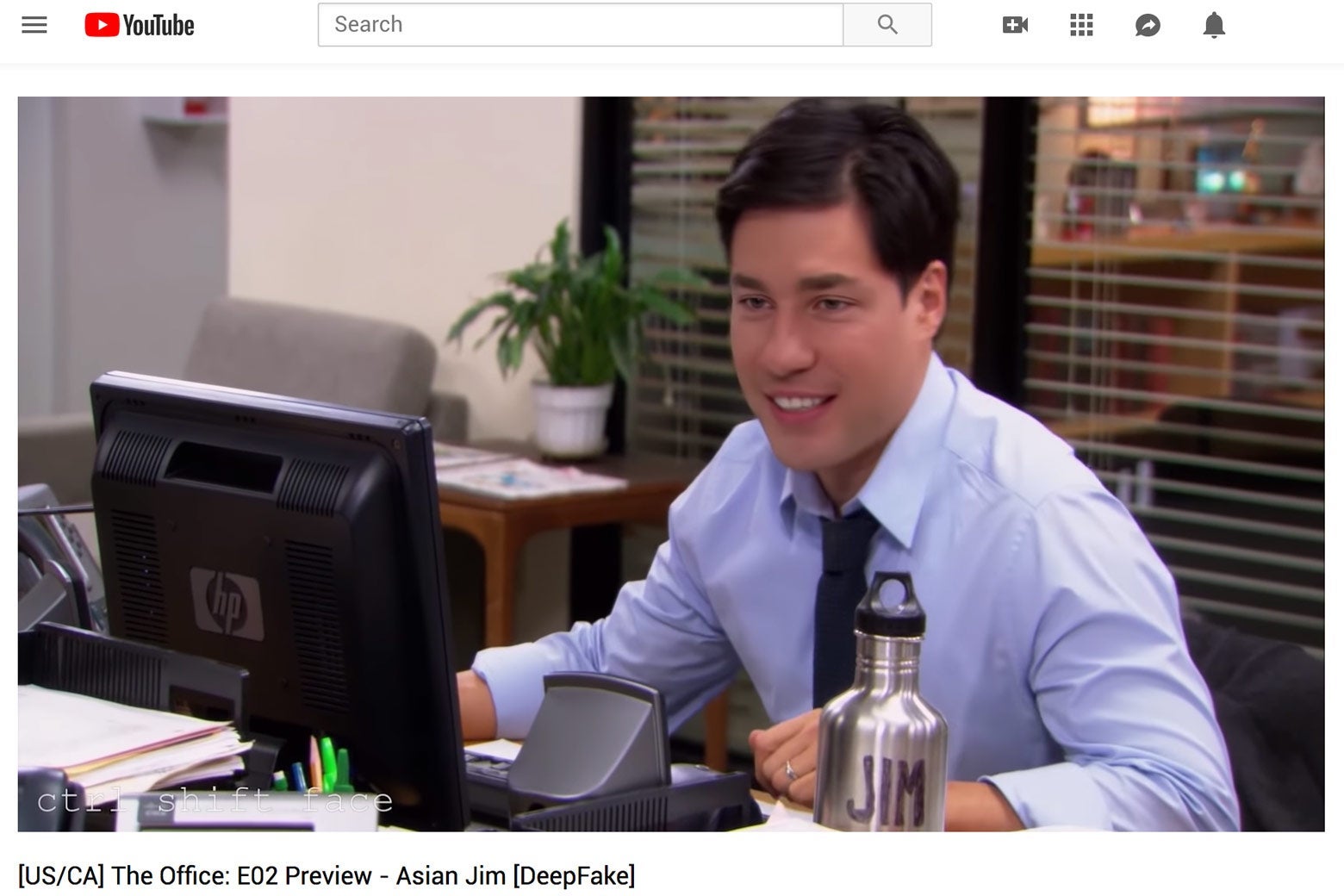

The deepfakes we’re watching for fun are, intentionally or not, serving that same purpose. The subreddit r/GifFakes has hundreds of examples of deepfakes, all of varying quality, but after watching a dozen or so, you learn to spot the giveaways: face discolorations, lighting that isn’t quite right, badly synced sound and video, or blurriness where the face meets the neck and hair. And seeing really convincing fakes in contexts you know in advance are fake serves as a good reminder of how powerful A.I. can be. The other day, a friend sent me this extremely well-done deepfake from an old episode of The Office.

If you didn’t watch the series, one recurring theme is that Jim (played by John Krasinski) constantly plays pranks on his dweeby co-worker Dwight (played by Rainn Wilson). In this scene, an Asian man (played by Randall Park) comes into the office claiming he’s Jim (Krasinski is white), then tries to convince Dwight that he’s actually been Asian the entire time. In the fake, Park and Krasinski’s faces are seamlessly morphed, and I had trouble finding any cracks in the façade, despite having seen the original clip dozens of times. (My editor, on the other hand, pointed out that the morphed Park/Krasinski face was maybe a bit too large for its head, and upon another watch, I can see it.) The video’s creator, Ctrl Shift Face, has also made around a dozen other convincing fakes, like swapping in Sylvester Stallone’s face for Arnold Schwarzenegger’s in scenes from the Terminator series, or subbing Edward Norton’s face in for Brad Pitt’s in Fight Club.

On his account, Ctrl Shift Face says he’s not allowed to monetize his YouTube channel due to copyright claims, so he’s created a Patreon account instead. This may serve primarily as an income generator for the creator to keep putting time into these videos, but it’s also among the best deepfake learning tools out there for casual consumers of the genre. Patrons who pay $5 a month get to see behind-the-scenes features, like one comparing the deepfake to the original video and a timelapse showing the results as the A.I. was being trained. Ten dollars a month unlocks downloads of the original training data sets.

Peering behind the curtain to see how fakes are made and picking them apart on social media may be the closest thing to a deepfake version of Sewell’s “lie-search” exercise. And as the arms race between digital doctoring and detection of the doctoring drags on, humans’ common sense and critical-analysis faculties are the best tools we have in the fight against misinformation.

Future Tense is a partnership of Slate, New America, and Arizona State University that examines emerging technologies, public policy, and society.